Secure Databricks Serverless Compute environment

When developing FFA TITAN 2.0, our managed cloud-native data platform, we made a strategic decision to shift from Azure Synapse to Azure Databricks. One of the core features for our clients is the ability to perform SQL-based data analysis. That's why FFA TITAN 2.0 has Databricks Serverless SQL Compute enabled by default.

Our platform is ISO27001 certified, which comes with strict security requirements: all data in motion must remain within the Azure network and must not traverse the public internet. To achieve this, we configured Azure Databricks with VNet Injection and deployed the other platform components in a similar fashion, shielding them from public access.

However, deploying Databricks Serverless SQL Compute and enabling it to connect to other FFA TITAN platform resources in the platform's VNet required extra steps. In this blog post, we’ll walk you through how we automated this process.

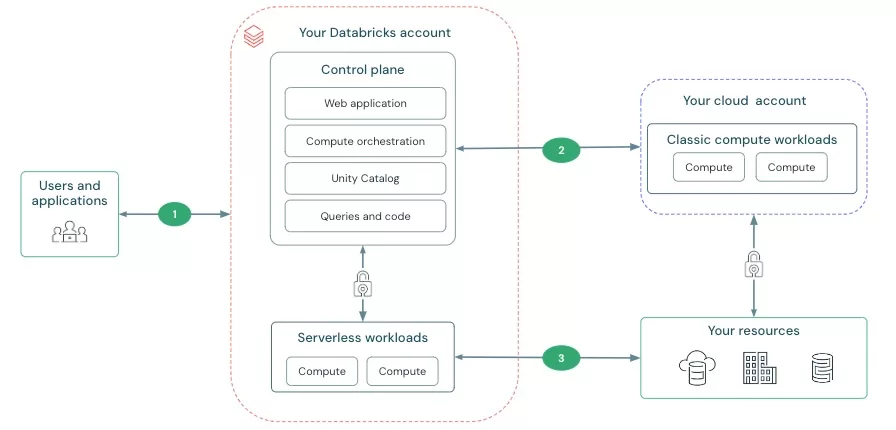

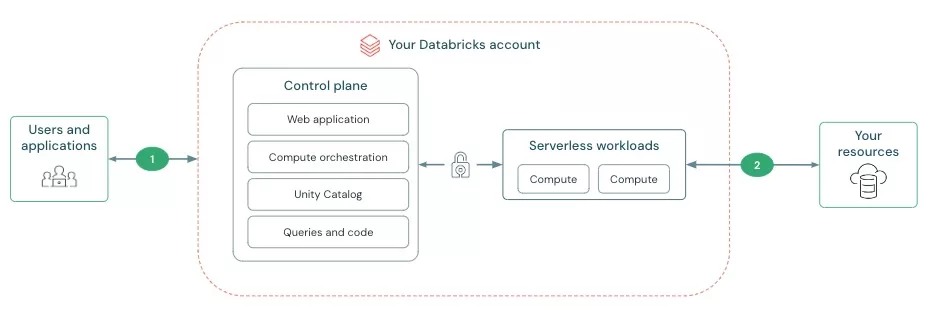

Azure Databricks operates out of a control plane and a compute plane. The compute plane comprises ‘Classic compute workloads’ and ‘Serverless workloads’.

We identify three distinct flows:

In this blog post, our goal is to connect the serverless compute plane to our resources securely and privately.

Databricks Serverless SQL Compute is a fully managed service that allows users to execute SQL queries without the need to manually manage clusters. It's optimized for interactive SQL workloads and scales automatically based on demand.

VNet Injection allows you to deploy the Databricks classic compute plane into your own Azure Virtual Network (VNet). This ensures that traffic between Databricks and other Azure services (such as storage accounts or databases in the same VNet) goes over the Azure backbone instead of being exposed to the public internet.
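The Terraform later in this post refers to the workspace as `azurerm_databricks_workspace.ffa_titan_databricks_workspace` but does not show how it is declared. Below is a minimal sketch of a VNet-injected workspace using the azurerm provider. It is an illustration, not FFA TITAN's actual configuration. The VNet (`azurerm_virtual_network.ffa_titan_vnet`), the subnet names and the two NSG association resources are hypothetical and assumed to be declared elsewhere.

```hcl
# Minimal sketch of a VNet-injected Azure Databricks workspace.
# Hypothetical: the VNet, subnet names and NSG association resources referenced below.
resource "azurerm_databricks_workspace" "ffa_titan_databricks_workspace" {
  name                = "your-databricks-workspace"
  resource_group_name = var.resourcegroup_name
  location            = var.location
  sku                 = "premium" # Databricks SQL and Unity Catalog require the Premium tier

  custom_parameters {
    no_public_ip        = true # secure cluster connectivity: no public IPs on cluster nodes
    virtual_network_id  = azurerm_virtual_network.ffa_titan_vnet.id
    public_subnet_name  = "databricks-host"      # hypothetical subnet name
    private_subnet_name = "databricks-container" # hypothetical subnet name

    public_subnet_network_security_group_association_id  = azurerm_subnet_network_security_group_association.databricks_host.id
    private_subnet_network_security_group_association_id = azurerm_subnet_network_security_group_association.databricks_container.id
  }
}
```

Setting `virtual_network_id` together with the two subnet names is what enables VNet Injection. The resource also exports the numeric `workspace_id` that the NCC binding needs.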

In classic compute workloads, Databricks clusters run within your own Azure subscription using VNet Injection. This allows them to securely access Azure resources such as Azure Data Lake Storage or Azure SQL Database via private endpoints.

However, Serverless SQL Compute runs in a Databricks-managed VNet, so it has no access to your private resources out of the box. To overcome this limitation, we had to configure a private network connection that gives it secure access to our platform’s resources.

Fortunately, Azure Databricks serverless compute uses a network setup similar to Azure Data Factory's managed virtual network, which supports managed private endpoints. These endpoints create a secure connection between the Serverless SQL Compute managed network and other resources in your Azure environment.

Using Terraform, we automated three tasks: setting up the Databricks Network Connectivity Configuration (NCC), creating and auto-approving the required managed private endpoint connections, and binding the NCC to our workspace. In the next section, we’ll show you how to set this up with Terraform.

We want Databricks Serverless SQL Compute to connect securely and privately to our Azure Data Lake Storage Gen2 account, so that it can query external tables in Unity Catalog. Before we start, let's clarify which FFA TITAN 2.0 platform components are involved:

| # | Platform component | Location | Public access |
|---|---|---|---|
| 1 | FFA Titan Azure Data Lake Storage Gen2 account | FFA Titan Azure VNet | Disabled |
| 2 | Databricks Serverless SQL Compute | Databricks-managed VNet | Disabled |
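Platform component [1] is also referenced only by name (`azurerm_storage_account.ffa_titan_adls_gen2`) in the Terraform later in this post. Below is a sketch of an ADLS Gen2 account that matches the table above. It assumes Standard tier, LRS replication and a placeholder account name chosen for illustration.

```hcl
# Minimal sketch of platform component [1]: an ADLS Gen2 account with public access disabled.
resource "azurerm_storage_account" "ffa_titan_adls_gen2" {
  name                     = "yourdatalakeaccount" # hypothetical; globally unique, 3-24 lowercase letters/digits
  resource_group_name      = var.resourcegroup_name
  location                 = var.location
  account_kind             = "StorageV2"
  account_tier             = "Standard"
  account_replication_type = "LRS"
  is_hns_enabled           = true # hierarchical namespace makes this an ADLS Gen2 account (dfs endpoint)

  # "Public access: Disabled": the account is reachable only through private endpoints.
  public_network_access_enabled = false
}
```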

To establish secure and private connectivity between [1] and [2], our Infrastructure-as-Code has to do the following:

| # | IaC goal | Language |
|---|---|---|
| 1 | Create a Databricks NCC in the Databricks account | Terraform |
| 2 | Create a blob private endpoint rule for [1] | Terraform |
| 3 | Create a dfs private endpoint rule for [1] | Terraform |
| 4 | Associate the Databricks NCC with the Databricks workspace | Terraform |
| 5 | Auto-approve the managed private endpoints on [1] (FFA Titan Azure Data Lake Storage Gen2 account) | Terraform / PowerShell |
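Every Databricks resource in the steps below sets `provider = databricks.accounts`, an account-level provider alias that the snippets do not define. Below is a minimal sketch of that alias and of the azurerm provider. It assumes the service principal authenticates through the `ARM_CLIENT_ID`, `ARM_CLIENT_SECRET` and `ARM_TENANT_ID` environment variables, and that a hypothetical `var.databricks_account_id` holds the Databricks account ID.

```hcl
# Account-level Databricks provider, referenced as provider = databricks.accounts.
provider "databricks" {
  alias      = "accounts"
  host       = "https://accounts.azuredatabricks.net"
  account_id = var.databricks_account_id # hypothetical variable holding your Databricks account ID
  # Credentials are assumed to come from ARM_CLIENT_ID / ARM_CLIENT_SECRET / ARM_TENANT_ID.
}

provider "azurerm" {
  features {}
  subscription_id = var.subscription
}
```

The service principal must be a Databricks account admin, otherwise the NCC resources cannot be created.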

Step 1 creates an NCC, an account-level Databricks object that governs private endpoint creation and firewall enablement for serverless compute in a given region.

resource "databricks_mws_network_connectivity_config" "ffa_titan_ncc" {

provider = databricks.accounts

name = "your-databricks-ncc"

region = var.location

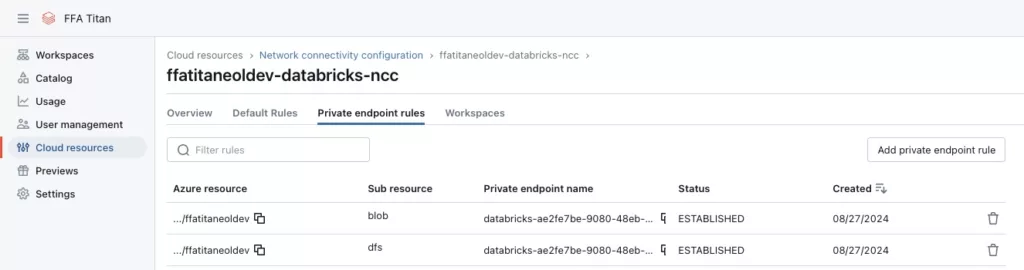

}This creates a private endpoint rule for blob storage on our Azure Data Lake.

```hcl
resource "databricks_mws_ncc_private_endpoint_rule" "ffa_titan_ncc_per_blob" {
  provider                       = databricks.accounts
  network_connectivity_config_id = databricks_mws_network_connectivity_config.ffa_titan_ncc.network_connectivity_config_id
  resource_id                    = azurerm_storage_account.ffa_titan_adls_gen2.id
  group_id                       = "blob" # storage sub-resource to connect to
}
```

Step 3 creates a private endpoint rule for the DFS (Data Lake Storage Gen2) endpoint of the same account.

```hcl
resource "databricks_mws_ncc_private_endpoint_rule" "ffa_titan_ncc_per_dfs" {
  provider                       = databricks.accounts
  network_connectivity_config_id = databricks_mws_network_connectivity_config.ffa_titan_ncc.network_connectivity_config_id
  resource_id                    = azurerm_storage_account.ffa_titan_adls_gen2.id
  group_id                       = "dfs" # storage sub-resource to connect to
}
```

Step 4 links the NCC to our Databricks workspace to enforce private connectivity.

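```hcl
resource "databricks_mws_ncc_binding" "ffa_titan_ncc_binding" {
  provider                       = databricks.accounts
  network_connectivity_config_id = databricks_mws_network_connectivity_config.ffa_titan_ncc.network_connectivity_config_id
  workspace_id                   = azurerm_databricks_workspace.ffa_titan_databricks_workspace.workspace_id # numeric workspace ID
}
```

Step 5 approves the private endpoint connections automatically. Databricks creates the managed private endpoints, but they stay pending until the owner of the target resource approves them. We therefore approve them from Terraform with a `null_resource` that runs a PowerShell script.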

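```hcl
resource "null_resource" "approve_synapse_dfs_private_endpoint" {
  # timestamp() changes on every run, so the script re-runs on every terraform apply.
  triggers = {
    always_run = timestamp()
  }
  # Run only after both endpoint rules exist; before that there is nothing to approve.
  depends_on = [
    databricks_mws_ncc_private_endpoint_rule.ffa_titan_ncc_per_blob,
    databricks_mws_ncc_private_endpoint_rule.ffa_titan_ncc_per_dfs,
  ]
  provisioner "local-exec" {
    # local-exec defaults to /bin/sh (cmd on Windows); this assumes PowerShell 7 (pwsh) on the runner.
    interpreter = ["pwsh", "-Command"]
    command     = "& '<your-powershell-script>.ps1' -azureSubscriptionId '${var.subscription}' -azureResourceGroupName '${var.resourcegroup_name}' -azureResourceId '${azurerm_storage_account.ffa_titan_adls_gen2.id}'"
  }
}
```

The PowerShell script logs in with the service principal and approves every pending private endpoint connection on the storage account:

```powershell
param (
    [Parameter(Mandatory)] [string] $azureSubscriptionId,
    [Parameter(Mandatory)] [string] $azureResourceGroupName,
    [Parameter(Mandatory)] [string] $azureResourceId
)

# read the service principal credentials from the same ARM_* variables Terraform uses
$azureTenantId = $env:ARM_TENANT_ID
$azurePrincipalAppId = $env:ARM_CLIENT_ID
$azurePrincipalSecret = $env:ARM_CLIENT_SECRET

# connect to tenant
az login --service-principal -u $azurePrincipalAppId -p $azurePrincipalSecret --tenant $azureTenantId

# select correct azure subscription
az account set --subscription $azureSubscriptionId

# list all private endpoint connections on the target resource
$connections = az network private-endpoint-connection list -g $azureResourceGroupName --id $azureResourceId | Out-String | ConvertFrom-Json

# approve every connection that is still pending
foreach ($connection in $connections) {
    $id = $connection.id
    $status = $connection.properties.privateLinkServiceConnectionState.status
    if ($status -eq "Pending") {
        Write-Host "$id is in a pending state"
        az network private-endpoint-connection approve --id $id --description "Approved by FFA Titan Terraform"
    }
}
```

By running the Terraform code, we created secure, private connectivity between Databricks Serverless SQL Compute and our Azure Data Lake Storage Gen2 account, as shown in the NCC status overview below.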

This setup enables us to run SQL queries on external tables in Unity Catalog whose data files live in a storage account with public access disabled. Automating this process with Terraform ensures that our platform remains ISO27001 compliant while leveraging a fully managed SQL environment.
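A possible refinement of step 5, sketched under assumptions: the `timestamp()` trigger re-runs the approval script on every `terraform apply`. The variant below instead keys the trigger on the IDs of the two endpoint rules, so the script runs only when a rule is created or replaced. It also exposes each rule's `connection_state` as an output. The resource name `approve_adls_private_endpoints` is hypothetical, the script path stays a placeholder, and the sketch assumes your Databricks provider version exports `connection_state` on `databricks_mws_ncc_private_endpoint_rule`.

```hcl
# Variant of step 5: re-run the approval script only when an endpoint rule changes.
resource "null_resource" "approve_adls_private_endpoints" {
  # Referencing the rules also makes Terraform create them before running the script.
  triggers = {
    blob_rule = databricks_mws_ncc_private_endpoint_rule.ffa_titan_ncc_per_blob.id
    dfs_rule  = databricks_mws_ncc_private_endpoint_rule.ffa_titan_ncc_per_dfs.id
  }

  provisioner "local-exec" {
    interpreter = ["pwsh", "-Command"] # assumes PowerShell 7 on the runner
    command     = "& '<your-powershell-script>.ps1' -azureSubscriptionId '${var.subscription}' -azureResourceGroupName '${var.resourcegroup_name}' -azureResourceId '${azurerm_storage_account.ffa_titan_adls_gen2.id}'"
  }
}

# State reported by Databricks for each rule, as last read by Terraform.
output "adls_blob_endpoint_state" {
  value = databricks_mws_ncc_private_endpoint_rule.ffa_titan_ncc_per_blob.connection_state
}

output "adls_dfs_endpoint_state" {
  value = databricks_mws_ncc_private_endpoint_rule.ffa_titan_ncc_per_dfs.connection_state
}
```

The outputs reflect the state at the time Terraform last read the rules, so they may still show a pending state right after the first apply. If Azure has not yet listed a connection as pending when the script runs, the script approves nothing. A retry loop in the script would be the natural next step.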

For a secure and compliant Databricks Serverless SQL setup, follow the steps above. Would you like assistance? Contact us for help setting up secure and compliant Databricks environments!

Serverless SQL is usage-based and billed per second, which makes it cost-effective for variable workloads. Classic compute involves hourly pricing and may incur costs even when a cluster is idle, until it is terminated.

Serverless SQL can support cross-region networking using Azure’s global VNet peering, which allows secure private traffic between regions. However, take the additional costs into account.

Tooling

- Azure Subscription: Active subscription with necessary permissions.

- Azure Databricks: Workspace with Serverless SQL Compute enabled.

- Terraform: Installed locally or in CI/CD, compatible with Azure/Databricks providers.

- Azure CLI: Installed for managing Azure resources and approving private endpoints.

- PowerShell: For running the private endpoint approval script.

Variables

- Azure Subscription ID & Resource Group name: Required for resource deployment.

- Azure Region: Region of Databricks and the other resources (e.g., eastus).

- Databricks Workspace & NCC IDs: Workspace and Network Connectivity Configuration IDs.

- Storage Account ID: ID of the Azure Data Lake Storage Gen2 account.

- Tenant ID, Client ID, Client Secret: Credentials for Azure service principal.
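The Terraform variables used in the snippets above (`location`, `subscription`, `resourcegroup_name`) could be declared as follows. This is a sketch: `databricks_account_id` is a hypothetical addition for an account-level Databricks provider. The service principal credentials are intentionally left out, because the approval script reads them from the `ARM_*` environment variables.

```hcl
# Variables referenced by the Terraform in this post; databricks_account_id is hypothetical.
variable "location" {
  type        = string
  description = "Azure region for Databricks, the NCC and the storage account (e.g. eastus)"
}

variable "subscription" {
  type        = string
  description = "Azure subscription ID, also passed to the approval script"
}

variable "resourcegroup_name" {
  type        = string
  description = "Name of the resource group that holds the platform resources"
}

variable "databricks_account_id" {
  type        = string
  description = "Databricks account ID used by an account-level provider (hypothetical)"
}
```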

Libraries

- Terraform providers:
  - azurerm: for Azure resource management.
  - databricks: for Databricks configuration.
  - null: for the `null_resource` that runs the approval script.

- Azure CLI: the `az network private-endpoint-connection` command group.
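The providers listed above could be declared in a `required_providers` block like the sketch below. It names only the registry sources and leaves version constraints to you, since no versions are given here.

```hcl
terraform {
  required_providers {
    azurerm = {
      source = "hashicorp/azurerm" # Azure resources (workspace, storage account)
    }
    databricks = {
      source = "databricks/databricks" # NCC, private endpoint rules and binding
    }
    null = {
      source = "hashicorp/null" # null_resource that runs the approval script
    }
    # Add a version constraint to each provider that matches the versions you have tested.
  }
}
```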

Permissions

- Azure RBAC: Contributor/Owner access to Azure resources.

- Databricks: Account admin role, which is required to create and manage NCCs.

- Private Link approval: An Azure role on the storage account that allows approving its private endpoint connections.

Networking Setup

- VNet/Subnets: A preconfigured VNet with two subnets (host and container) for VNet Injection.

- Private Endpoints: Enabled private endpoints in the target Azure region.
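The subnet requirement above can be sketched in Terraform for the host subnet. The container subnet is declared the same way with its own name and address range. The VNet reference, subnet name, address range and NSG (`azurerm_network_security_group.databricks`) are hypothetical. The delegation block follows the pattern azurerm uses for Azure Databricks VNet Injection.

```hcl
# Sketch of the host subnet for VNet Injection (hypothetical names and address range).
resource "azurerm_subnet" "databricks_host" {
  name                 = "databricks-host"
  resource_group_name  = var.resourcegroup_name
  virtual_network_name = azurerm_virtual_network.ffa_titan_vnet.name # hypothetical existing VNet
  address_prefixes     = ["10.0.1.0/24"]                              # hypothetical range

  # VNet Injection requires the subnet to be delegated to Azure Databricks.
  delegation {
    name = "databricks-delegation"

    service_delegation {
      name = "Microsoft.Databricks/workspaces"
      actions = [
        "Microsoft.Network/virtualNetworks/subnets/join/action",
        "Microsoft.Network/virtualNetworks/subnets/prepareNetworkPolicies/action",
        "Microsoft.Network/virtualNetworks/subnets/unprepareNetworkPolicies/action",
      ]
    }
  }
}

# Each Databricks subnet must also be associated with a network security group.
resource "azurerm_subnet_network_security_group_association" "databricks_host" {
  subnet_id                 = azurerm_subnet.databricks_host.id
  network_security_group_id = azurerm_network_security_group.databricks.id # hypothetical NSG
}
```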
