Bootstrap Azure Subscription for DevOps

The food industry finds itself at the forefront of a digital transformation driven by data and artificial intelligence (AI) and is faced with new challenges. The pressure is on for the food industry to innovate and adapt. This blog explores Data and AI's role in digitally transforming the food industry and discusses challenges, fundamentals and applicability of AI in the food industry.

An organization in the food industry generates a large number of data points, and the actors across the food supply chain generate even more. Benefiting from Data & AI brings significant challenges when it comes to data diversity, data security, and change management.

In the food industry, the lack of standardized data formats and taxonomies limits data integration significantly. Data standardization defines common formats, structures, and naming conventions for integration and interoperability purposes. This involves standardizing data elements like product codes, units of measure, and customer identifiers, along with common data models.
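As a minimal sketch of what standardizing data elements can look like in practice, the snippet below maps records from two source systems onto one naming convention. The field names, the unit alias table, and the eight-digit customer identifier are assumptions made for illustration, not an industry standard.

```python
# Hypothetical alias table: source-system spellings mapped to one standard unit code.
UNIT_ALIASES = {
    "kg": "KG", "kilo": "KG", "kilogram": "KG",
    "g": "G", "gram": "G",
    "l": "L", "liter": "L", "litre": "L",
}


def standardize_record(raw: dict) -> dict:
    """Map one source record onto a common format and naming convention."""
    unit_key = raw["unit"].strip().lower().rstrip(".")
    if unit_key not in UNIT_ALIASES:
        raise ValueError(f"Unknown unit of measure: {raw['unit']!r}")
    return {
        # Product codes: trimmed, upper case, no embedded spaces.
        "product_code": raw["product_code"].strip().upper().replace(" ", ""),
        "unit_of_measure": UNIT_ALIASES[unit_key],
        # Customer identifiers: zero-padded to eight digits (assumed convention).
        "customer_id": raw["customer_id"].strip().zfill(8),
    }


# The same product and customer as recorded by two different systems.
erp_row = {"product_code": " ab-1029 ", "unit": "Kilo", "customer_id": "4711"}
mes_row = {"product_code": "AB-1029", "unit": "kg.", "customer_id": "00004711"}

print(standardize_record(erp_row) == standardize_record(mes_row))
```

Once both records share one format, they can be joined on product code and customer identifier without system-specific exceptions.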

Implementing data standardization requires collaboration and coordination across departments and stakeholders within the organization. Cross-organization alignment requires industry-wide regulatory data standards. Food industry organizations may adhere to standards like GS1 for product identification and ISO 22000 for food safety management.

The benefits of data standardization extend beyond improved interoperability to enhanced data quality, consistency, and reliability. By implementing data standardization, organizations can streamline data integration processes.

Data breaches are becoming increasingly common, so protecting sensitive data is crucial for organizations. Data such as Bills of Materials, payment details, and customer information must be treated as confidential. The exposure grows as organizations collect and store more of this data for purposes such as product optimization, data-driven pricing strategies, and product development.

Another challenge is the increasing sophistication of cyber threats targeting the food industry (e.g. the hack of Duvel Moortgat). Mitigating these risks requires organizations to invest in cybersecurity infrastructure, employee training, and incident response capabilities.

Consider investing in secure cloud-based infrastructure. Note, however, that moving to a public cloud provider such as AWS or Azure does not make your environment secure by default, nor does it mean you can securely exchange data with other parties just because it is 'in the cloud'. You need to make it secure by encrypting both data at rest and data in motion, while integrating it with your existing landscape.

Resistance to change is natural for us. It often stems from concerns about job displacement and unfamiliarity with new technologies. To address this, leaders must champion digital transformation, leading by example and emphasizing the value of data-driven approaches.

To overcome resistance, organizations should encourage innovation and teamwork while prioritizing ongoing learning. Communication strategies should be implemented to emphasize the benefits of digital transformation and equip employees with the necessary skills for success.

Investment in employee training programs is crucial to ensure that staff possess the skills needed to leverage data effectively. By emphasizing that digital transformation enhances human capabilities rather than replacing them, organizations can take away concerns and get buy-in.

Having solved the organization's challenges, you are not done yet; you are just getting started. To truly benefit from Data & AI, an organization must master the fundamentals of master data, data registration, data quality, and data processing with a robust Data & AI infrastructure.

The quality of master data is crucial to ensure the accuracy and reliability of decision-making processes. Master data refers to data entities that are essential to an organization (e.g. product information, customer profiles, and Bill of Materials).

Effective master data management begins with identifying and defining key data entities. This involves defining standard data models and structuring master data. By establishing data definitions and formats, organizations ensure consistency and interoperability across different systems.

Once the data entities have been identified, the next step is to implement data governance processes.

Maintaining master data is one thing, but it is equally important to have accurate and consistent source data registration. This involves capturing and recording data at its origin, whether it is generated internally or obtained from external sources.

To effectively manage source data registration, organizations should implement standardized data entry protocols and validation checks. This enforces data quality at the point of capture, in source systems such as MES, ERP, CRM, and finance. It may involve defining mandatory fields, format requirements, and validation rules.
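The sketch below illustrates mandatory fields, format requirements, and validation rules for a goods-receipt entry. The field names and the batch number format are hypothetical; in a real landscape these rules would live in the source system itself.

```python
import re
from datetime import date

# Hypothetical rules for a goods-receipt entry screen.
MANDATORY_FIELDS = ("product_code", "batch_number", "quantity", "best_before")
BATCH_PATTERN = re.compile(r"^[A-Z]{2}\d{6}$")  # assumed format, e.g. "NL240115"


def validate_entry(entry: dict, today: date) -> list[str]:
    """Return a list of validation errors; an empty list means the entry is accepted."""
    errors = []
    # Mandatory fields: every field must be present and non-empty.
    for field in MANDATORY_FIELDS:
        if entry.get(field) in (None, ""):
            errors.append(f"{field} is mandatory")
    # Format requirement: the batch number must match the agreed pattern.
    batch = entry.get("batch_number")
    if batch and not BATCH_PATTERN.match(batch):
        errors.append("batch_number must be two letters followed by six digits")
    # Validation rules: plausible quantity and a best-before date in the future.
    quantity = entry.get("quantity")
    if isinstance(quantity, (int, float)) and quantity <= 0:
        errors.append("quantity must be greater than zero")
    best_before = entry.get("best_before")
    if isinstance(best_before, date) and best_before <= today:
        errors.append("best_before must lie in the future")
    return errors


good_entry = {"product_code": "AB-1029", "batch_number": "NL240115",
              "quantity": 120, "best_before": date(2030, 1, 1)}
bad_entry = {"product_code": "", "batch_number": "nl-1",
             "quantity": -5, "best_before": date(2020, 1, 1)}

print(validate_entry(good_entry, today=date(2024, 1, 15)))
print(validate_entry(bad_entry, today=date(2024, 1, 15)))
```

Rejecting the second entry at the moment of capture is far cheaper than finding and correcting it after it has spread to reports and downstream systems.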

Accurate and structured data input is essential for ensuring the integrity and reliability of downstream processes. Without it, organizations risk introducing errors into their datasets, which can have implications for decision-making and business operations.

High data quality provides a foundation for decision-making and analysis. Poor data quality, on the other hand, can lead to erroneous conclusions, misinformed decisions, and wasted resources.

The first step in maintaining data quality is to define clear standards and criteria for what constitutes high-quality data. Data quality management then comprises preventive and corrective measures. Preventive measures identify and address data quality issues at the source, while corrective measures cleanse existing data.

Implementing data quality controls and validation rules is crucial for data quality management. Enforcing these in the data lifecycle enables organizations to detect and prevent errors before they flow downstream (e.g. faulty product prices being used in the invoice process).
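To make the invoice example concrete, here is a minimal sketch of a quality gate that compares invoice prices with a master price list before invoicing. The 20% tolerance, the field names, and the product codes are assumptions chosen for illustration.

```python
def find_suspect_prices(invoice_lines, price_list, tolerance=0.20):
    """Return (product_code, reason) for lines that should not flow into invoicing."""
    suspects = []
    for line in invoice_lines:
        reference = price_list.get(line["product_code"])
        if reference is None:
            suspects.append((line["product_code"], "no master price"))
            continue
        # Relative deviation of the invoice price from the master price.
        deviation = abs(line["unit_price"] - reference) / reference
        if deviation > tolerance:
            suspects.append(
                (line["product_code"], f"deviates {deviation:.0%} from master price")
            )
    return suspects


# Hypothetical master price list and invoice lines.
price_list = {"AB-1029": 2.50, "CD-2040": 10.00}
invoice_lines = [
    {"product_code": "AB-1029", "unit_price": 2.55},  # within tolerance
    {"product_code": "CD-2040", "unit_price": 1.00},  # likely a misplaced decimal
    {"product_code": "ZZ-0001", "unit_price": 5.00},  # unknown product
]

suspects = find_suspect_prices(invoice_lines, price_list)
print(suspects)
```

Lines that end up in `suspects` can be held back for review, so only verified prices reach the customer.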

A robust data infrastructure comprises the hardware, software, and networking that enable ingestion, storage, processing, and analysis of data.

At the heart of a data infrastructure is the data & analytics platform. It is the repository for storing and organizing data and can handle structured, semi-structured, and unstructured data.

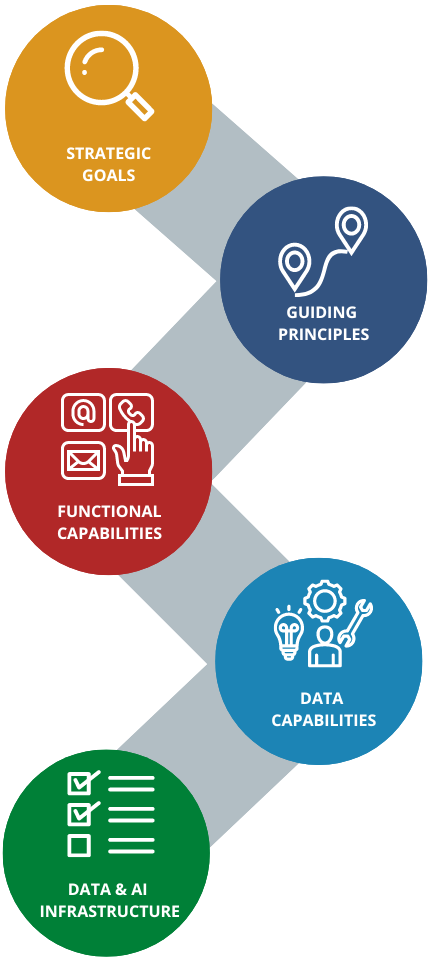

To define your Data & AI platform, start by defining your strategic goals and your current and future use cases.

Based on your strategic goals, deduce guiding principles for your platform, such as build-over-buy, event-over-batch, and calculate-once-use-many.

Take those guiding principles and convert them into functional capabilities for the platform. Those functional capabilities help you identify the data capabilities your platform needs to enable them.

Finally, based on the data capabilities you identify, select and build the Data & AI architecture for your platform. This provides the scalability and flexibility needed for current and future use cases, allowing you to reach your strategic goals.

Artificial Intelligence in the food industry can be categorized into four main application areas: Machine Learning, Natural Language Processing, Robotics, and Computer Vision.

Traditional maintenance practices in food processing rely on scheduled maintenance intervals or reactive repairs after equipment fails. Predictive maintenance uses sensor data and equipment diagnostics to monitor machinery, preempting major issues before they occur.

Predictive maintenance uses sensor-equipped machinery to monitor its health and performance. Machine learning algorithms analyze this data to detect patterns and anomalies indicative of potential equipment failures. By correlating sensor data with maintenance- and failure-data, predictive maintenance systems can identify early signs of failures.
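As a rough illustration of detecting anomalies in sensor data, the sketch below flags readings that deviate strongly from the preceding window using a rolling z-score. This is a simple statistical stand-in rather than a trained machine learning model, and the vibration values, window size, and threshold are made up for the example.

```python
from statistics import mean, stdev


def detect_anomalies(readings, window=5, threshold=3.0):
    """Return (index, value) for readings far outside the preceding window."""
    anomalies = []
    for i in range(window, len(readings)):
        history = readings[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma == 0:
            continue  # flat history: the z-score is undefined, so skip
        z_score = (readings[i] - mu) / sigma
        if abs(z_score) > threshold:
            anomalies.append((i, readings[i]))
    return anomalies


# Hypothetical vibration readings (mm/s) from a conveyor belt motor.
vibration = [2.1, 2.0, 2.2, 2.1, 2.0, 2.1, 2.2, 2.0, 3.9, 2.1]

print(detect_anomalies(vibration))
```

A production system would typically combine several sensors and learn from labeled maintenance and failure records, but the principle is the same: compare current behavior with what is normal for that machine.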

For example, if a predictive maintenance system detects a deviation in a conveyor belt motor, it may trigger an alert, prompting the team to inspect the motor and address any issues before they escalate. By addressing potential problems early, food manufacturers can avoid costly downtime, maintain consistent product quality, and minimize product waste.

Traditional demand forecasting methods rely on historical sales data and basic statistical techniques to project future demand. These methods may miss seasonality, trends, and external factors affecting consumer behavior and purchasing patterns (e.g. the FIFA World Cup or the Olympic Games).

Predictive analytics uses machine learning to produce more accurate demand forecasts by spotting patterns and trends. It analyzes sales history, demographics, and other factors to do so.
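To show how an external factor such as a sporting event can enter a forecast, the sketch below estimates an event uplift from past sales. It is deliberately a simple baseline, not a machine learning model; the weekly sales figures are invented, and a real model would learn from many more features.

```python
from statistics import mean


def fit_event_uplift(history):
    """history: list of (units_sold, is_event_week) tuples."""
    normal = [units for units, is_event in history if not is_event]
    event = [units for units, is_event in history if is_event]
    baseline = mean(normal)
    # Uplift: how much more is sold in event weeks relative to normal weeks.
    uplift = mean(event) / baseline if event else 1.0
    return baseline, uplift


def forecast(baseline, uplift, is_event_week):
    """Forecast weekly demand, applying the uplift only in event weeks."""
    return baseline * (uplift if is_event_week else 1.0)


# Hypothetical weekly snack sales; True marks weeks with a major sporting event.
history = [(100, False), (110, False), (90, False),
           (150, True), (100, False), (150, True)]

baseline, uplift = fit_event_uplift(history)
print(forecast(baseline, uplift, is_event_week=True))
print(forecast(baseline, uplift, is_event_week=False))
```

A forecast based only on average historical sales would miss the event weeks entirely, which is exactly the gap that richer predictive models aim to close.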

Predictive analytics optimizes inventory levels by identifying demand trends, reducing stockouts, minimizing costs, and improving cash flow.

Quality control is essential in the food industry. Computer vision analyzes visual data, using cameras and algorithms to detect defects, anomalies, and quality deviations in food products.

One use case of computer vision is the use of inspection systems to detect defects in fruits and vegetables. By analyzing images of produce in real time, these systems allow manufacturers to remove defective products from the production line before they reach consumers. This reduces the risk of recalls and protects brand reputation.

Computer vision systems are also used to inspect packaged food products. By analyzing images of product labels, barcodes, and packaging materials, computer vision algorithms can verify product authenticity as well as expiration dates and packaging integrity. This ensures compliance with regulatory requirements and quality standards.
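Once a vision system has decoded a barcode from an image, one simple check it could run is the check digit calculation. The sketch below assumes the decoded value is a 13-digit GTIN (EAN-13) and leaves the image decoding itself out of scope.

```python
def is_valid_gtin13(code: str) -> bool:
    """Check the final digit of a 13-digit GTIN against its check digit rule."""
    if len(code) != 13 or not (code.isascii() and code.isdigit()):
        return False
    digits = [int(c) for c in code]
    # The first twelve digits are weighted alternately 1 and 3, from the left.
    weighted = sum(d * (3 if i % 2 else 1) for i, d in enumerate(digits[:12]))
    expected_check_digit = (10 - weighted % 10) % 10
    return expected_check_digit == digits[12]


print(is_valid_gtin13("4006381333931"))  # decoded correctly
print(is_valid_gtin13("4006381333932"))  # last digit misread
```

A failed check suggests a misprinted or misread label, so the package can be routed to manual inspection instead of continuing down the line.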

In the food industry personalization meets consumer demands for tailored products and experiences. This is achieved through AI and machine learning. Recommendation systems personalize experiences by suggesting products tailored to individual preferences and purchase histories.
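A very small sketch of a recommendation based on purchase histories is shown below. It scores products bought by customers with overlapping baskets; the customers, products, and scoring rule are assumptions for illustration, and production recommenders are considerably more sophisticated.

```python
from collections import Counter


def recommend(purchase_histories, customer, top_n=2):
    """Suggest products bought by customers with similar purchase histories."""
    own = purchase_histories[customer]
    scores = Counter()
    for other, basket in purchase_histories.items():
        if other == customer:
            continue
        overlap = len(own & basket)  # number of products both customers bought
        if overlap == 0:
            continue
        # Products the customer has not bought yet, weighted by similarity.
        for product in basket - own:
            scores[product] += overlap
    return [product for product, _ in scores.most_common(top_n)]


# Hypothetical purchase histories.
purchase_histories = {
    "anna": {"oat milk", "granola", "blueberries"},
    "ben": {"oat milk", "granola", "almond butter"},
    "carla": {"granola", "almond butter", "dark chocolate"},
    "dave": {"cola", "crisps"},
}

print(recommend(purchase_histories, "anna"))
```

Here the customer is shown almond butter first because the shoppers most similar to her bought it, while products from unrelated baskets are never suggested.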

AI-powered personalization also extends to menu customization. Analyzing customer data enables restaurants to customize menus, meeting patrons' preferences and dietary restrictions. This not only enhances the dining experience for customers but also improves customer satisfaction and loyalty.

AI helps in product development by analyzing trends, insights, and preferences, enabling organizations to create targeted innovations. For instance, AI algorithms analyze social media data, identifying popular food trends and flavor profiles, helping organizations anticipate consumer demand.

The integration of data and AI is a great opportunity for the food industry. We addressed key aspects such as building data infrastructure, tackling challenges and exploring AI applications.

Building a strong foundation for data & analytics is pivotal. We must ensure that our data is of high quality and well-organized to derive actionable insights. We also recognize challenges, such as dealing with complex data, ensuring data privacy and, last but not least, coping with change within our organizations.

Fortunately, there are strategies we can employ to overcome these challenges: mastering fundamentals such as data standardization and data encryption, establishing a clear vision and communication on the topic of digital transformation, and investing in a modern data infrastructure that enables data-driven decision-making.

There is still lots of room for exploration of data & AI within the food industry, from implementing precision agriculture techniques to optimizing supply chain operations. Don't let data overwhelm you; utilize it and benefit from it!

Organizations can measure ROI by comparing key performance indicators before and after implementing master data management (MDM).

When choosing between on-premises and cloud infrastructure, consider factors such as scalability, flexibility, security, and cost. On-premises solutions offer greater control but require upfront investment. Cloud solutions provide scalability and agility, allowing organizations to scale resources on demand.

Barriers to the adoption of automation technologies include fear of job displacement, a lack of awareness or understanding of automation capabilities, and resistance to change from employees. Organizations can remove these barriers by emphasizing that automation is a tool that supports employees rather than replacing them.